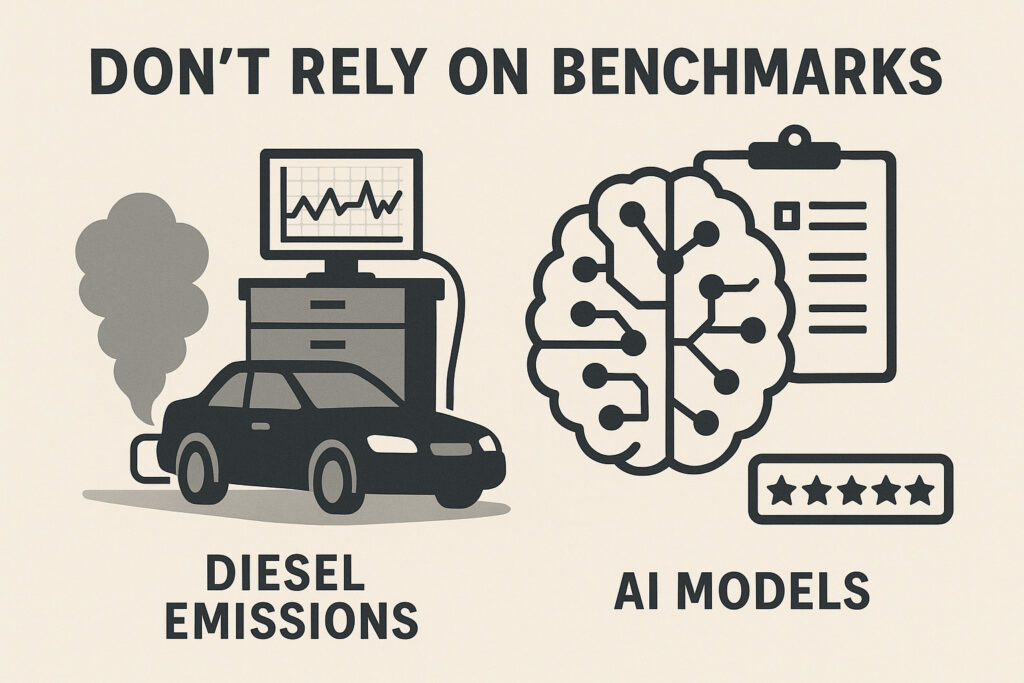

When choosing the right AI solution, relying solely on published benchmarks is risky.

It’s not much different from the old diesel emissions scandal: cars were tuned to perform perfectly in test conditions, but real-world driving told a different story.

The same happens with AI models. Each new release is often tuned to score well on existing benchmarks, the standardized tests others created to compare models. That doesn’t mean it will perform well on your specific use case.

What’s the alternative?

Run quick, targeted tests against your real requirements. The model that wins on paper may not be the one that delivers in practice.

Some examples:

- A language model that ranks high on reasoning benchmarks may still fail on your domain-specific information and questions.

- A computer vision model trained on public datasets may not recognize your industrial images with the accuracy you need, or may not respond well to fine-tuning on your data.

- An anomaly detection system might look great in demo results but fail to detect subtle issues in your IoT or OT data.

Key takeaways:

- Stay open.

- Test multiple technologies.

- Match performance against your own data and processes.

In the end, the right AI solution is the one that works for you, not the one that tops a leaderboard.