The industrial sector holds a lot of commercially and intellectually sensitive material.

That’s why cloud-hosted LLM services like ChatGPT, Gemini, and others are often banned in these environments: you can’t risk your data leaving the network.

But open-source models have come a long way.

They now offer powerful, context-aware responses and can be run entirely offline.

✅ No cloud or accounts required

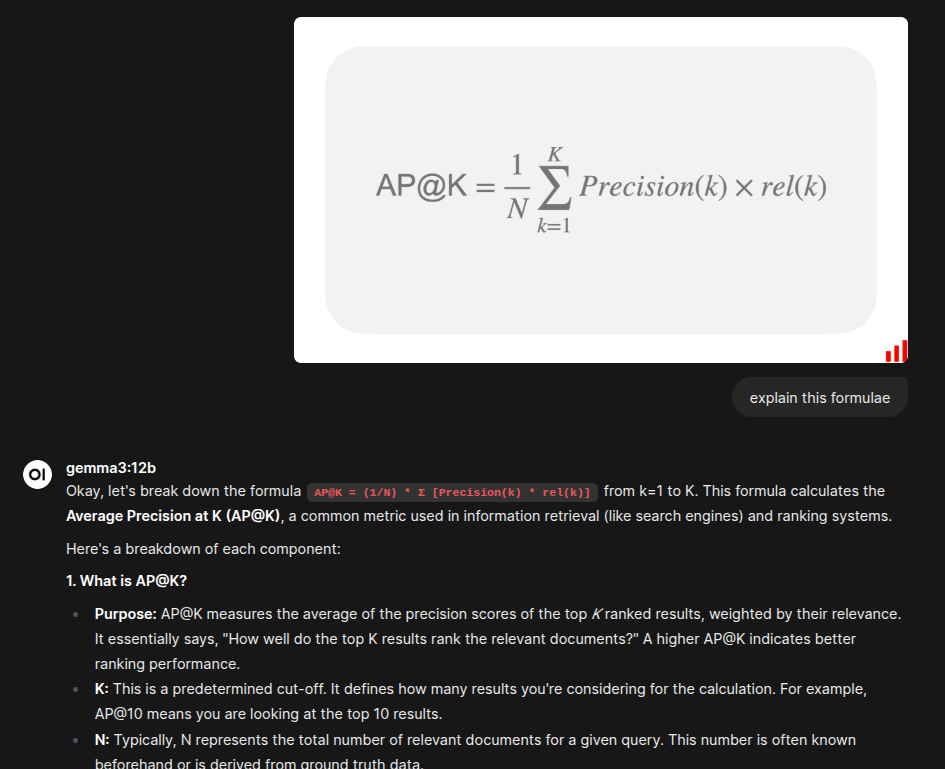

✅ Able to chat, share knowledge or interpret images

✅ Easy to setup, runs on consumer-grade NVIDIA GPUs

After testing many models with Ollama, Gemma3 from Google is standing out as the best for both speed and accuracy.

Here’s a real example of it interpreting an image locally, with no internet or external parties involved.
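If you want to try a local image query like this yourself, here is a minimal sketch in Python using only the standard library. It assumes Ollama is running on the same machine at its default address (`http://localhost:11434`) and that the model was pulled with `ollama pull gemma3`. The helper names (`build_payload`, `is_local_url`, `describe_image`) are hypothetical, and the local-only URL check is an illustrative safeguard I added, not an Ollama feature.

```python
import base64
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama's default local API endpoint for one-shot generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical allow-list: only loopback addresses count as "local".
LOCAL_HOSTS = {"localhost", "127.0.0.1", "::1"}


def is_local_url(url: str) -> bool:
    """Return True only if the URL points at this machine."""
    return urlparse(url).hostname in LOCAL_HOSTS


def build_payload(image_bytes: bytes, prompt: str, model: str = "gemma3") -> dict:
    """Build a non-streaming request body carrying one base64-encoded image."""
    return {
        "model": model,
        "prompt": prompt,
        # Ollama accepts images as a list of base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        # Ask for one complete JSON reply instead of a stream of chunks.
        "stream": False,
    }


def describe_image(path: str, prompt: str, model: str = "gemma3",
                   url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send a local image to the local Ollama server and return the text answer."""
    # Refuse to send anything unless the endpoint is on this machine.
    if not is_local_url(url):
        raise ValueError(f"refusing to send data to non-local endpoint: {url}")
    with open(path, "rb") as f:
        payload = build_payload(f.read(), prompt, model)
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # The generated text is returned in the "response" field.
    return body["response"]
```

Usage would look like `describe_image("inspection.jpg", "Describe what this image shows.")`, where `inspection.jpg` is a hypothetical file name. The allow-list check is a deliberately simple way to make "nothing leaves the machine" explicit in code; a real deployment would still rely on network controls as well.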

If you’re working with sensitive data but still want AI-powered insight, this is a serious option to consider.